Previous findings

Below you can find an overview of some of our previous results, along with the data sets, source code, and publications.

If you have any questions about this work, please write us an email at info@labinthewild.org.

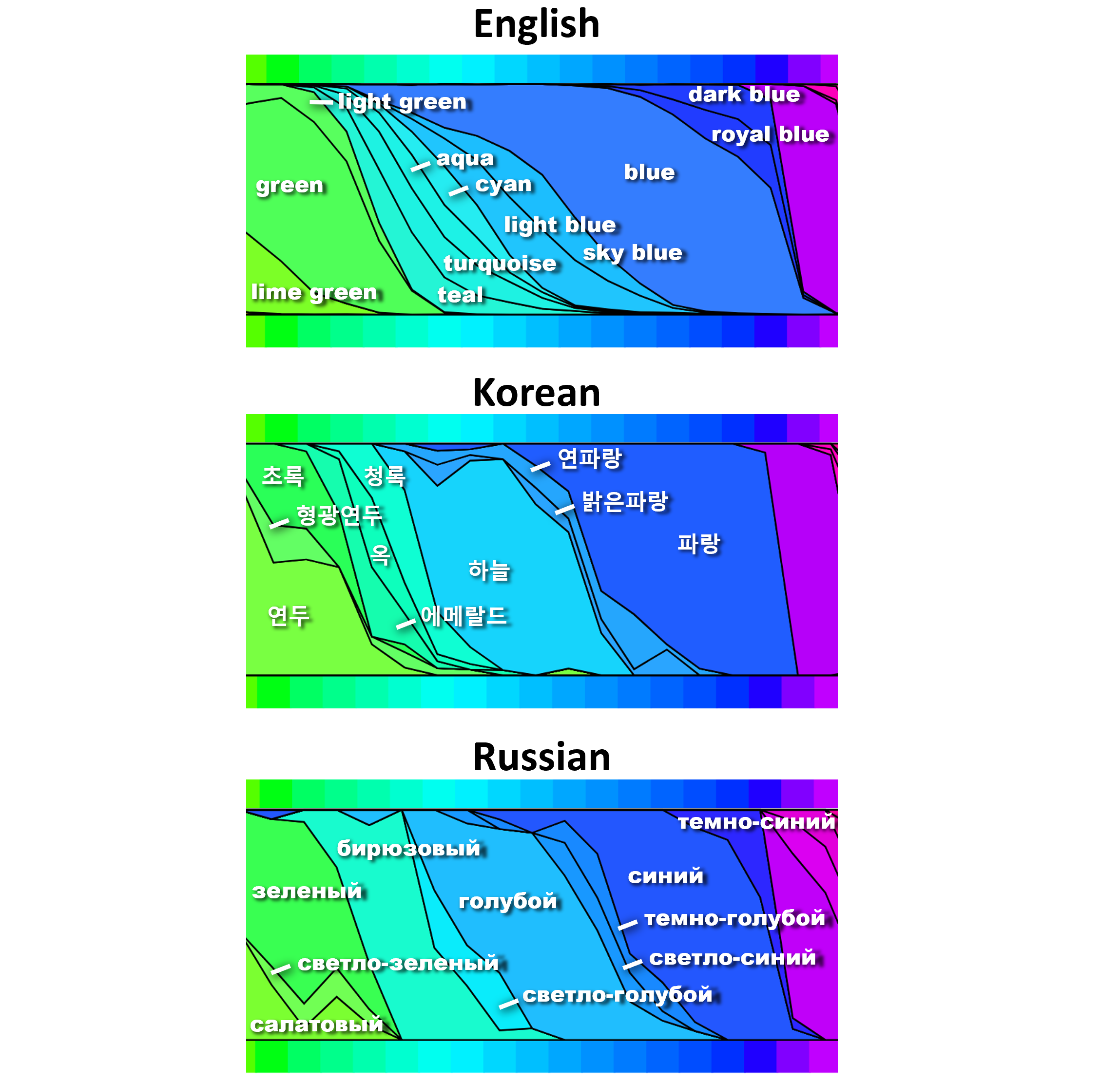

How is the color spectrum divided up differently in different languages?

To answer this question, we collected color names in 14 common written languages and built probabilistic models that identify the set of nameable (salient) colors in each language. For example, we observed that unlike English and Chinese, Russian and Korean have more than one nameable blue among fully saturated RGB colors. These differences in naming can influence how people perceive colors and visualizations.
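To make "nameable (salient)" more concrete: one measure used in earlier color naming models scores a color by the negative entropy of the names people give it, so a color that nearly everyone calls "blue" scores higher than one that receives a spread of names. The sketch below assumes that measure and uses made-up responses. It is not the multilingual model from the paper, and the function names are hypothetical.

```python
import math
from collections import Counter


def name_distribution(names):
    """Estimate P(name | color) from the raw names participants typed for one color."""
    counts = Counter(n.strip().lower() for n in names)
    total = sum(counts.values())
    return {name: c / total for name, c in counts.items()}


def naming_entropy(names):
    """Shannon entropy in bits of the name distribution; 0 means everyone used the same name."""
    return -sum(p * math.log2(p) for p in name_distribution(names).values())


def saliency(names):
    """Hypothetical saliency score: negative entropy, so consistently named colors score higher."""
    return -naming_entropy(names)


if __name__ == "__main__":
    # Made-up responses for two fully saturated RGB colors (illustration only).
    responses = {
        "#0000ff": ["blue"] * 9 + ["navy"],
        "#00ffff": ["cyan", "light blue", "aqua", "turquoise"],
    }
    ranked = sorted(responses.items(), key=lambda kv: saliency(kv[1]), reverse=True)
    for color, names in ranked:
        print(color, round(saliency(names), 3))
```

Running the same computation on responses collected in different languages is one way to see where, for instance, two distinct blues are each named consistently.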

Publication:

Younghoon Kim, Kyle Thayer, Gabriella Silva Gorsky, and Jeffrey Heer, "Color Names Across Languages: Salient Colors and Term Translation in Multilingual Color Naming Models", Computer Graphics Forum (Proc. EuroVis), 2019. Download the PDF

Data used in this publication:

How do participants currently contribute on LabintheWild and how would they like to do so in the future?

To answer this question, we analyzed more than 8,000 feedback messages that volunteers left at the end of four LabintheWild studies. We found that many participants ask questions about the research or suggest improvements to the studies. These findings point to several opportunities for involving participants in online experiments beyond simple participation: (1) participants often want learning experiences, such as learning about themselves by interactively comparing their results with those of others, or learning about the broader research goals; and (2) participants often want to be involved in the research itself, for instance by giving feedback on experiments or suggesting new research directions. In summary, designers of online experiments should create opportunities for participants to become citizen scientists.

Publication:

Nigini Oliveira, Eunice Jun, and Katharina Reinecke, "Citizen Science Opportunities in Volunteer-Based Online Experiments", Human Factors in Computing Systems (CHI), 2017. Download the PDF

Data used in this publication:

Dictionary containing classes of feedback: PDF.

Dataset: If you are interested in the full dataset used in our analysis, please write an email to info@labinthewild.org.

Thinking vs. Clicking in Modern User Interfaces

Given the choice between clicking more and exerting more mental effort to get a job done, introverts and people with a high need for cognition lean toward mental effort, while extroverts and people with a low need for cognition click more. This suggests that not all people benefit equally from some of the effort-saving features of modern user interfaces.

Publication:

Krzysztof Z. Gajos and Krysta Chauncey, "The Influence of Personality Traits and Cognitive Load on the Use of Adaptive User Interfaces", Proceedings of ACM IUI '17, 2017.


Data used in this publication:

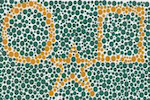

Color vision in digital environments

People often cannot see on-screen content because of challenging lighting conditions. From teenagers on the sidewalk contorting their bodies to cast shadows over their mobile phones to office workers adjusting their monitor settings for better readability, we are all aware of the impact that lighting, devices, and configuration can have on our ability to see and differentiate colors. However, designers have been largely unsupported in estimating how users' varying color perception affects their designs. This is aggravated by the fact that color vision is an inherently individual experience, influenced both by external factors, such as situational lighting conditions, and by internal factors, such as possible color blindness. To address this, we conducted an online color differentiation test on LabintheWild with around 30,000 participants. Based on this data, we developed ColorCheck, an image-processing tool that enables designers to foresee which colors users cannot see.
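ColorCheck's own model is built from the LabintheWild differentiation data and is not reproduced here. As a rough stand-in, the sketch below uses the standard CIE76 color difference (ΔE*ab, the Euclidean distance in CIELAB, converting from sRGB with a D65 white point) and flags a pair of colors as hard to tell apart when the difference falls below a threshold. The default threshold of 2.3 is an often-quoted just-noticeable difference, and `condition_factor`, which raises the threshold for poor lighting or displays, is a hypothetical parameter rather than a value from the paper.

```python
import math


def srgb_channel_to_linear(value):
    """Undo the sRGB transfer curve for one 0-255 channel value."""
    c = value / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_to_lab(rgb):
    """Convert an (r, g, b) tuple with 0-255 channels to CIELAB (D65 white point)."""
    r, g, b = (srgb_channel_to_linear(v) for v in rgb)
    # Linear sRGB -> CIE XYZ using the standard sRGB/D65 matrix.
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b
    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e76(rgb1, rgb2):
    """CIE76 color difference: Euclidean distance between two colors in CIELAB."""
    return math.dist(srgb_to_lab(rgb1), srgb_to_lab(rgb2))


def likely_distinguishable(rgb1, rgb2, threshold=2.3, condition_factor=1.0):
    """Hypothetical check: True if the difference exceeds the condition-scaled threshold."""
    return delta_e76(rgb1, rgb2) > threshold * condition_factor


if __name__ == "__main__":
    print(likely_distinguishable((200, 0, 0), (201, 0, 0)))
    print(likely_distinguishable((255, 0, 0), (0, 0, 255), condition_factor=3.0))
```

A fixed perceptual metric like this ignores exactly what the paragraph emphasizes, namely that differentiation varies from person to person and situation to situation; that variation is what a data-driven model such as ColorCheck aims to capture.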

Publication:

Katharina Reinecke, David Flatla, and Christopher Brooks, "Enabling Designers to Foresee Which Colors Users Cannot See", Human Factors in Computing Systems (CHI), 2016. Download the PDF

Data used in this publication:

Data sets (zip file, 14MB, non-commercial Creative Commons license)

Attitudes about mobile phone use at mealtimes

Mealtimes are a cherished part of everyday life around the world. Often centered on family, friends, or special occasions, sharing meals is a practice embedded with traditions and values. However, as mobile phones become increasingly pervasive, tensions emerge about how appropriate it is to use personal devices while sharing a meal with others. Furthermore, while personal devices have been designed to support awareness for the individual user (e.g., notifications), little is known about how to support shared awareness of what is acceptable in social settings such as meals. To understand attitudes about mobile phone use during shared mealtimes, we conducted an online survey on LabintheWild. We found that attitudes about mobile phone use at meals differ depending on the particular phone activity and on who at the meal is engaged in it (children versus adults). We were able to show that three major factors affect participants’ attitudes: 1) their own mobile phone use; 2) their age; and 3) whether a child is present at the meal.

Publication:

Carol Moser, Sarita Yardi Schoenebeck, and Katharina Reinecke, "Technology at the Table: Attitudes about Mobile Phone Use at Mealtimes", Human Factors in Computing Systems (CHI), 2016. Download the PDF

Data used in this publication:

Data set (Excel file, 140KB)

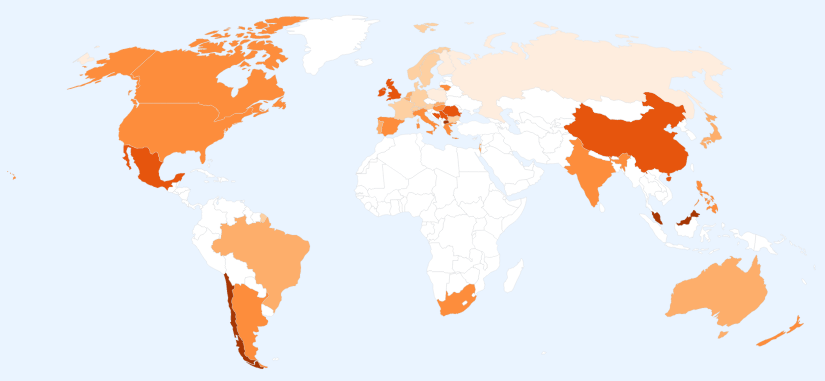

How does LabintheWild work and who are the participants?

When we developed LabintheWild, we wanted to create a platform that is useful for participants and for researchers. We wanted participants to learn something when taking part in our experiments, and we wanted to give researchers a way to study people from varying backgrounds, old and young, from all over the world. This is important because research is often conducted with participants from Western countries only. So, almost two years after launching the platform, we decided to look at where you all come from and why you choose to participate in our experiments.

What did we find?

So many things! We learned that people visit LabintheWild from almost every country in the world. We know that teenagers, seniors, and everyone in between participates in our tests. From the comments you've left us, we know that many of you enjoy comparing your results to the results of others, but we've also learned that boring tasks are less appreciated (big surprise there!). We were grateful to learn how many participants honestly report being distracted during experiments. Feedback like this is useful for us as researchers and helps us ensure our results are accurate.

Publication:

Katharina Reinecke and Krzysztof Gajos, "LabintheWild: Conducting Large-Scale Online Experiments With Uncompensated Samples", Computer Supported Cooperative Work and Social Computing (CSCW), 2015 (to appear). 15 pages. Download the PDF

What is your website aesthetic?

When you see a website for the first time, you decide within the blink of an eye—about half a second!—whether you find it appealing or not. In this experiment, we studied how our first impressions of websites differ. Do some people like more colorful websites than others? Are complex websites appealing to everyone?

Our goal is to eventually predict whether someone will find a particular website appealing. We hope that web designers will be able to use this information to build websites that people from all over the world enjoy using.

What did we find?

After asking more than 40,000 people from 200 countries around the world to rate websites on appeal, we found that people's visual preferences for websites mainly depend on their age, gender, country of origin, and education level. Knowing these details about a person enables us to predict whether that person will find a website appealing at first sight.

If you are interested in exploring the website preferences of people from different countries, we have developed a visualization that walks you through the most exciting differences that we found. Click here to try it out!

Publication:

Katharina Reinecke and Krzysztof Gajos, "Quantifying Visual Preferences Around the World", Human Factors in Computing Systems (CHI), 2014. Download the PDF

Data and source code used in this publication:

Supplementary materials and data sets (zip file, 41MB, non-commercial Creative Commons license)

Website stimuli (zip file, 135MB)

Link to the source code repository for computing visual features (Link to the Bitbucket project)


What do you perceive as colorful and complex?

Our goal in this research was to automatically measure the colorfulness and complexity of website screenshots. Of course, we could have tried to judge the colorfulness and complexity ourselves, but this would have taken a while! Instead, we used an automatic computation to quickly and reliably determine color and complexity statistics for any given number of websites. This gave us an unbiased measure of a website's colorfulness and complexity.
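As an illustration of what an automatic color statistic can look like, here is the colorfulness metric of Hasler and Süsstrunk (2003), which combines the spread and the average of two opponent-color channels. It is a widely used measure, not necessarily the exact statistic behind the published model, and the pixel format (a list of 0-255 RGB tuples, for example sampled from a screenshot) is an assumption.

```python
import math
from statistics import fmean, pstdev


def colorfulness(pixels):
    """Hasler-Süsstrunk colorfulness of a list of (r, g, b) tuples with 0-255 channels."""
    rg = [r - g for r, g, b in pixels]              # red-green opponent channel
    yb = [0.5 * (r + g) - b for r, g, b in pixels]  # yellow-blue opponent channel
    sigma = math.hypot(pstdev(rg), pstdev(yb))      # combined spread of both channels
    mu = math.hypot(fmean(rg), fmean(yb))           # combined mean of both channels
    return sigma + 0.3 * mu


if __name__ == "__main__":
    gray_page = [(128, 128, 128)] * 4
    red_green_page = [(255, 0, 0), (0, 255, 0)] * 2
    print(colorfulness(gray_page), colorfulness(red_green_page))
```

A purely gray image scores 0, and the score grows as colors become both more saturated and more varied, which is why a single number like this can be computed quickly for any number of screenshots.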

To develop this method, we asked 306 participants to judge the colorfulness and complexity of various websites. We also developed a set of image statistics that we computed based on a website screenshot. These image statistics include, for example, the average saturation of colors on the website, the number of text and image areas, and the distribution of these elements across the site. Using these image statistics, we were then able to develop a model that predicts how colorful and visually complex humans perceive a website to be.
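The paragraph above combines two ingredients: an image statistic per screenshot and a model fitted to participants' ratings. A minimal sketch of both follows, assuming pixels are 0-255 RGB tuples: `average_saturation` computes the mean HSV saturation, and `fit_line` fits an ordinary least-squares line from that one statistic to mean colorfulness ratings. The published model used several statistics; the single-feature fit, the function names, and the toy numbers are illustrative assumptions only.

```python
import colorsys
from statistics import fmean


def average_saturation(pixels):
    """Mean HSV saturation (0-1) over a list of (r, g, b) tuples with 0-255 channels."""
    return fmean(colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[1] for r, g, b in pixels)


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept with a single feature."""
    mx, my = fmean(xs), fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("feature values must not all be equal")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


if __name__ == "__main__":
    # Toy "screenshots" and made-up mean ratings (illustration only).
    screenshots = [
        [(128, 128, 128)] * 4,               # all gray
        [(255, 0, 0), (128, 128, 128)] * 2,  # half saturated red, half gray
        [(255, 0, 0), (0, 0, 255)] * 2,      # fully saturated
    ]
    ratings = [1.5, 4.0, 7.5]
    features = [average_saturation(s) for s in screenshots]
    slope, intercept = fit_line(features, ratings)
    print(f"predicted rating = {slope:.2f} * saturation + {intercept:.2f}")
```

Once fitted, the same two functions can score a new screenshot without asking anyone to rate it, which is the practical payoff of learning the mapping from human judgments.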

What did we find?

The method that we developed can take any website screenshot and determine how colorful and complex humans perceive the site to be. We found that humans usually agree on what they judge to be colorful and complex. This suggests that no matter whether you were born in the US or India, you'll probably think of a simple website as simple, and a colorful website as colorful.

Publication:

Katharina Reinecke, Tom Yeh, Luke Miratrix, Rahmatri Mardiko, Yuechen Zhao, Jenny Liu, and Krzysztof Z. Gajos, "Predicting Users' First Impressions of Website Aesthetics With a Quantification of Perceived Visual Complexity and Colorfulness", Human Factors in Computing Systems (CHI), 2013. Download the PDF

Data and source code used in this publication:

Supplementary materials and data sets (PDF containing the parameters of the final model)

Website stimuli (zip file, 135MB)

Link to the source code repository for computing visual features (Link to the Bitbucket project)

Copyright © by LabintheWild.org